In the previous article we analyzed the benefits of designing a process in which mixing BDD with Performance Testing makes total sense. I like to believe that, apart from generating wild ideas, I also make them happen. Some time ago the NightCodeLabs team was formed, and we developed a solution to this challenge.

We chose the Java ecosystem because the team was using this language professionally when the project started, and we were aiming to solve a need we might run into at some point in our day-to-day jobs.

In this article you’ll get a general overview of how the solution works and how you can reuse existing BDD functional test code to write performance tests.

Architecture

The Test Project

A Java Maven project containing BDD scenarios in Cucumber and a test automation framework of your choice (e.g. rest-assured, Selenium, etc.). This is where the Performance Tests will live.

Pretzel

The test framework we’ll use to make our lives easier when doing load and performance testing with BDD. Pretzel starts and terminates both the worker and master instances for each test, which lets it collect individual results for each scenario. In between, Pretzel talks only to the worker: it sends the information on what needs to be tested, checks the status of the execution and, once the execution has finished, reads the results from the generated .csv file. The instances are then terminated, and the cycle repeats if there are more scenarios to execute.
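To make that cycle a bit more concrete, here is a minimal sketch of it in plain Java. It is not Pretzel’s actual code: the LoadSession interface, the FakeSession class and all method names are made up for illustration, and the fake simply pretends that a run needs a few status checks before it finishes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

/** Hypothetical stand-in for one master + worker pair (not Pretzel's real API). */
interface LoadSession {
    void start();                      // launch the master and worker instances
    void submit(String scenario);      // tell the worker what needs to be tested
    boolean isFinished();              // check the status of the execution
    long readMaxResponseTimeMillis();  // result that would come from the .csv file
    void stop();                       // terminate both instances
}

/** In-memory fake so the sketch runs without Locust; it only logs the calls. */
final class FakeSession implements LoadSession {
    private final List<String> events;
    private int pollsLeft = 2; // report "not finished" twice before completing

    FakeSession(List<String> events) { this.events = events; }

    @Override public void start() { events.add("start"); }
    @Override public void submit(String scenario) { events.add("submit " + scenario); }
    @Override public boolean isFinished() { return pollsLeft-- <= 0; }
    @Override public long readMaxResponseTimeMillis() { events.add("read"); return 120L; }
    @Override public void stop() { events.add("stop"); }
}

final class ScenarioCycleSketch {

    /** Runs every scenario in a fresh session so each one gets its own result. */
    static List<Long> runAll(List<String> scenarios, Supplier<LoadSession> sessions) {
        List<Long> results = new ArrayList<>();
        for (String scenario : scenarios) {
            LoadSession session = sessions.get();
            session.start();
            try {
                session.submit(scenario);
                while (!session.isFinished()) {
                    Thread.onSpinWait(); // a real implementation would sleep between checks
                }
                results.add(session.readMaxResponseTimeMillis());
            } finally {
                session.stop(); // always terminate, even if the run failed
            }
        }
        return results;
    }

    public static void main(String[] args) {
        List<String> events = new ArrayList<>();
        List<Long> results = runAll(List.of("Forced Yes", "Forced No"), () -> new FakeSession(events));
        System.out.println(results);
        System.out.println(events);
    }
}
```

Giving each scenario its own session is what keeps the results separate, at the cost of starting and stopping the instances every time.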

Master

This is where all the magic happens. The whole solution uses Locust to generate multiple users, to control how many of them become active per second, etc. Locust is originally a Python-based load testing tool which, happily, allows clients to be built in other programming languages, so anyone can use it in their own technology ecosystem. Clients already exist for languages such as Go and Java. If you don’t find one for yours, you could build your own.

Even though the project and its code don’t need to contain any Python, for the whole solution to work you’ll still need Python installed on the machine where you run the tests, together with a version of Locust.

Worker

This is the client that interacts with the master instance, translating the Java code into something Locust can understand. The worker featured in Pretzel is locust4j, which does a great job as a client in a simple project setup, but has its challenges when integrated into slightly more complex architectures that include BDD with Service/Page Objects. At NightCodeLabs we first tried to integrate it directly into the Test Project, but we ended up with a lot of code, which is now Pretzel. As a result, locust4j lives as a dependency inside Pretzel.

Your first Performance Test with BDD

Notes: The paragraphs that follow are based on the example project we’ve built at NightCodeLabs. Both the repo and the guide below are meant to show how this can work, rather than to demonstrate a perfect BDD example or testing patterns. Before starting, make sure you go through the installation guide. Grab a beer or a coffee, as installing Locust for the first time might take a while. Or avoid all of that and just launch the Docker container.

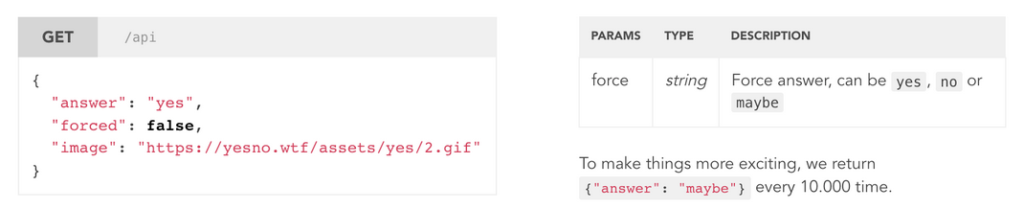

About the system under test

For this example we have chosen to do some API testing, based on this simple and public API. This is their documentation:

We already have the following functional test case, which we’d like to reuse as a performance test:

    Feature: Functional Test

      @Functional
      Scenario: Request a Forced yes Answer
        When a forced yes is requested
        Then the corresponding yes answer is returned

This feature file sits in a project with the following folder structure:

    -- /src/main/java
         /serviceobjects/ForcedAnswer.java
    -- /test/java
         /runner/TestRunner.java
         /steps/Definitions.java
    -- /test/resources
         /Functional.feature
    -- pom.xml

Behind the Functional Test, we have the following code:

    package steps;

    import cucumber.api.java.en.Then;
    import cucumber.api.java.en.When;
    import serviceobjects.ForcedAnswer;

    public class Definitions {

        ForcedAnswer forcedAnswer = new ForcedAnswer();

        @When("^a forced (.+) is requested$")
        public void aForcedAnswerTypeIsRequested(String answerType) {
            forcedAnswer.aForcedAnswerTypeIsRequested(answerType);
        }

        @Then("^the corresponding (.+) answer is returned$")
        public void theCorrespondingAnswerTypeIsReturned(String answerType) {
            forcedAnswer.theCorrespondingAnswerTypeIsReturned(answerType);
        }
    }

The Service Object class, which holds all the methods for forcing an answer from the API, uses the RestAssured testing library. You can use whatever you like here, including UI testing.

    package serviceobjects;

    import io.restassured.RestAssured;
    import io.restassured.http.Method;
    import io.restassured.path.json.JsonPath;
    import io.restassured.response.Response;
    import io.restassured.specification.RequestSpecification;
    import org.junit.Assert;

    public class ForcedAnswer {

        private String requestAnswer;
        // Response time of the last request in milliseconds; the performance
        // task later in this article reads it through getResponseTime().
        private long responseTime;

        public ForcedAnswer() {}

        public void aForcedAnswerTypeIsRequested(String answerType) {
            RestAssured.baseURI = "https://yesno.wtf/api";
            RequestSpecification httpRequest = RestAssured.given();
            Response response = httpRequest.request(Method.GET, "/?force=" + answerType);
            responseTime = response.getTime();
            JsonPath answer = response.getBody().jsonPath();
            System.out.println(answer.prettyPrint());
            requestAnswer = answer.getString("answer");
        }

        public void theCorrespondingAnswerTypeIsReturned(String answerType) {
            Assert.assertEquals("Correct answer returned", answerType, requestAnswer);
        }

        public long getResponseTime() {
            return responseTime;
        }
    }

Adding the Performance Test

First of all, add the pretzel dependency to your Maven project:

    <dependency>
        <groupId>com.github.nightcodelabs</groupId>
        <artifactId>pretzel</artifactId>
        <version>0.0.2</version>
    </dependency>

Let’s add the BDD Magic to Performance Testing:

    Feature: Performance Test

      @Performance
      Scenario: Request a forced yes answer
        When 100 users request a forced yes at 10 users/second for 1 min
        Then the answer is returned within 10000 milliseconds

Looks cool, right? Let’s update our existing step definitions. To make it work, we’ll need to:

- import pretzel, and create a new instance of it

- use doPretzel for the When (to better understand what each parameter is used for, you can check out the locust4j documentation). For simplicity, we recommend using your maximum number of users as the value for both the weight and the maxRPS

- use checkMaxResponseTimeAboveExpected for the Then. The scenario we’re designing fails only if the responses take longer than the expected time. This can be enhanced further to fail if a certain number of failures occur during the requests, if the failures/second go above a certain threshold, or on any other crazy combination you can think of.
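As a rough illustration of the checks described in the last point, here is a small, self-contained sketch. The RunStats class, its fields and both method names are assumptions made for this example and are not part of Pretzel; the idea is only to show how a response-time check could sit next to a failure-ratio check.

```java
/** Hypothetical summary of one load test run (not a Pretzel class). */
final class RunStats {
    final long requests;              // total requests, including failed ones
    final long failures;              // requests recorded as failures
    final long maxResponseTimeMillis; // slowest response seen during the run

    RunStats(long requests, long failures, long maxResponseTimeMillis) {
        this.requests = requests;
        this.failures = failures;
        this.maxResponseTimeMillis = maxResponseTimeMillis;
    }
}

final class ThresholdSketch {

    /** True when the slowest response took longer than expected. */
    static boolean maxResponseTimeAbove(RunStats stats, long expectedMillis) {
        return stats.maxResponseTimeMillis > expectedMillis;
    }

    /** True when the share of failed requests exceeds maxRatio (0.0 to 1.0). */
    static boolean failureRatioAbove(RunStats stats, double maxRatio) {
        if (stats.requests == 0) {
            return true; // nothing was measured, so treat the run as failed
        }
        return (double) stats.failures / stats.requests > maxRatio;
    }

    public static void main(String[] args) {
        RunStats stats = new RunStats(6000, 30, 850);
        System.out.println(maxResponseTimeAbove(stats, 10_000));
        System.out.println(failureRatioAbove(stats, 0.01));
    }
}
```

In a Then step, either result could be passed to Assert.assertFalse, in the same way the max response time check is used in this article. Back to our scenario, the updated step definitions look like this: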

    package steps;

    import cucumber.api.java.en.Then;
    import cucumber.api.java.en.When;
    import org.junit.Assert;
    import com.github.nightcodelabs.pretzel.Pretzel;
    import serviceobjects.ForcedAnswer;

    public class Definitions {

        Pretzel pretzel = new Pretzel();
        ForcedAnswer forcedAnswer = new ForcedAnswer();

        @When("^a forced (.+) is requested$")
        public void aForcedAnswerTypeIsRequested(String answerType) {
            forcedAnswer.aForcedAnswerTypeIsRequested(answerType);
        }

        @Then("^the corresponding (.+) answer is returned$")
        public void theCorrespondingAnswerTypeIsReturned(String answerType) {
            forcedAnswer.theCorrespondingAnswerTypeIsReturned(answerType);
        }

        // Starts the load test. maxUsers is reused as both the weight and the maxRPS,
        // and "ForcedYes" is the name of the task class to run.
        @When("^(.+) users request a forced yes at (.+) users/second for (.+) min$")
        public void usersRequestForcedYesAnswerAtRateMinute(Integer maxUsers, Integer usersLoadPerSecond, Integer testTime) throws Throwable {
            pretzel.doPretzel(maxUsers, usersLoadPerSecond, testTime, maxUsers, maxUsers, "ForcedYes");
        }

        // Fails the scenario if the maximum response time was above the expected one.
        @Then("^the answer is returned within (.+) milliseconds$")
        public void theAnswerIsReturnedWithinMilliseconds(Long expectedTime) {
            Assert.assertFalse(pretzel.checkMaxResponseTimeAboveExpected(expectedTime));
        }
    }

You’ll notice that up until now we haven’t reused any code, and you’re most probably wondering where all of that will go. Don’t worry: it goes into the “ForcedYes” class we need to create (and which pretzel knows how to read):

- in src/main/java create pretzel/ForcedYes.java, extending the Task class from Pretzel

- inside the execute block, add the calls to the service object

- call performance.recordSuccess and performance.recordFailure (in a try/catch block). This way, we can record when we start getting other types of responses while the test runs under extreme load
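The try/catch pattern from the last point can also be sketched on its own, without Pretzel or Locust on the classpath. In the sketch below, ResultRecorder and TimedTaskSketch are hypothetical names, and the elapsed time is measured with System.nanoTime around the whole action, which is only an approximation for cases where your service object cannot report the response time itself.

```java
/** Hypothetical recorder, loosely mirroring the recordSuccess/recordFailure calls. */
interface ResultRecorder {
    void recordSuccess(String type, String name, long responseTimeMillis);
    void recordFailure(String type, String name, long responseTimeMillis, String error);
}

final class TimedTaskSketch {

    /** Runs the action and reports it as a success or a failure, with its duration. */
    static void runTimed(String type, String name, Runnable action, ResultRecorder recorder) {
        long start = System.nanoTime();
        try {
            action.run(); // request plus assertion, e.g. calls on a service object
        } catch (AssertionError | RuntimeException error) {
            // A failed assertion and an unexpected exception both count as failures.
            recorder.recordFailure(type, name, elapsedMillis(start), String.valueOf(error.getMessage()));
            return;
        }
        recorder.recordSuccess(type, name, elapsedMillis(start));
    }

    private static long elapsedMillis(long startNanos) {
        return (System.nanoTime() - startNanos) / 1_000_000;
    }

    public static void main(String[] args) {
        ResultRecorder printer = new ResultRecorder() {
            @Override
            public void recordSuccess(String type, String name, long millis) {
                System.out.println("success " + type + " " + name + " " + millis + " ms");
            }

            @Override
            public void recordFailure(String type, String name, long millis, String error) {
                System.out.println("failure " + type + " " + name + " " + millis + " ms: " + error);
            }
        };
        runTimed("GET", "Forced Yes", () -> { }, printer);
        runTimed("GET", "Forced Yes", () -> { throw new AssertionError("Yes has not been returned"); }, printer);
    }
}
```

Because the timer wraps the whole action, the recorded value includes the assertion as well as the request, so prefer the response time reported by the HTTP client whenever it is available.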

Finally, the class would look something like this:

    package pretzel;

    import com.github.nightcodelabs.pretzel.performance.Task;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import serviceobjects.ForcedAnswer;

    public class ForcedYes extends Task {

        private static final Logger logger = LoggerFactory.getLogger(ForcedYes.class);

        private int weight;
        ForcedAnswer forcedAnswer = new ForcedAnswer();

        public ForcedYes(Integer weight) {
            this.weight = weight;
        }

        @Override
        public int getWeight() {
            return weight;
        }

        @Override
        public String getName() {
            return "Forced Yes";
        }

        @Override
        public void execute() {
            try {
                // Reuse the functional steps: send the request, then assert on the answer.
                forcedAnswer.aForcedAnswerTypeIsRequested("yes");
                forcedAnswer.theCorrespondingAnswerTypeIsReturned("yes");
                // getResponseTime() is expected to be provided by the ForcedAnswer service object.
                performance.recordSuccess("GET", getName(), forcedAnswer.getResponseTime(), 1);
            } catch (AssertionError | Exception error) {
                performance.recordFailure("GET", getName(), forcedAnswer.getResponseTime(), "Yes has not been returned");
                logger.info("Something went wrong in the request");
            }
        }
    }

That’s it! Now we’re all set to actually run it.

Buuuut… we’re missing something: the reports. Let’s say we’re using a version of extentreports, and we want to integrate the graphs generated by pretzel inside that report. It’s simple:

- update the runner, initiating the report directory inside the TestRunner class:

    private static Pretzel pretzel = new Pretzel();

    @BeforeClass
    public static void beforeClass() {
        pretzel.initiateReportDirectory();
    }

- in the steps folder, add a Hooks.java class, which attaches the graphs of the performance scenarios to the extentreports report.

    package steps;

    import java.io.IOException;
    import com.vimalselvam.cucumber.listener.Reporter;
    import cucumber.api.Scenario;
    import cucumber.api.java.After;
    import com.github.nightcodelabs.pretzel.Pretzel;

    public class Hooks {

        Pretzel pretzel = new Pretzel();

        @After(order = 0)
        public void afterSteps(Scenario scenario) throws IOException {
            if (scenario.getSourceTagNames().contains("@Performance")) {
                Reporter.addScreenCaptureFromPath(pretzel.getGeneratedChartFilePath(), "Performance Results");
            }
        }
    }

Now we are really ready to run it.

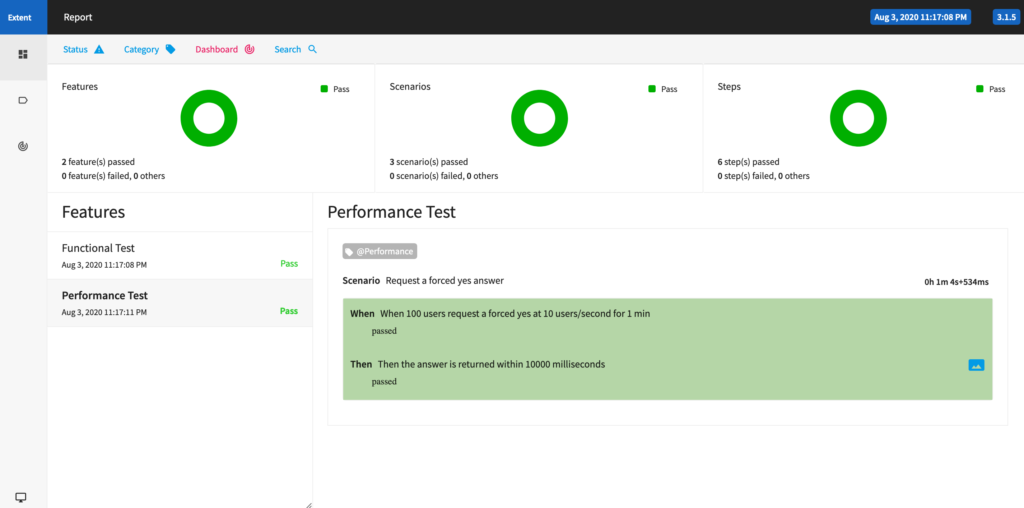

After the execution is finished we can see that the Performance Test Feature is part of the Extent Reports:

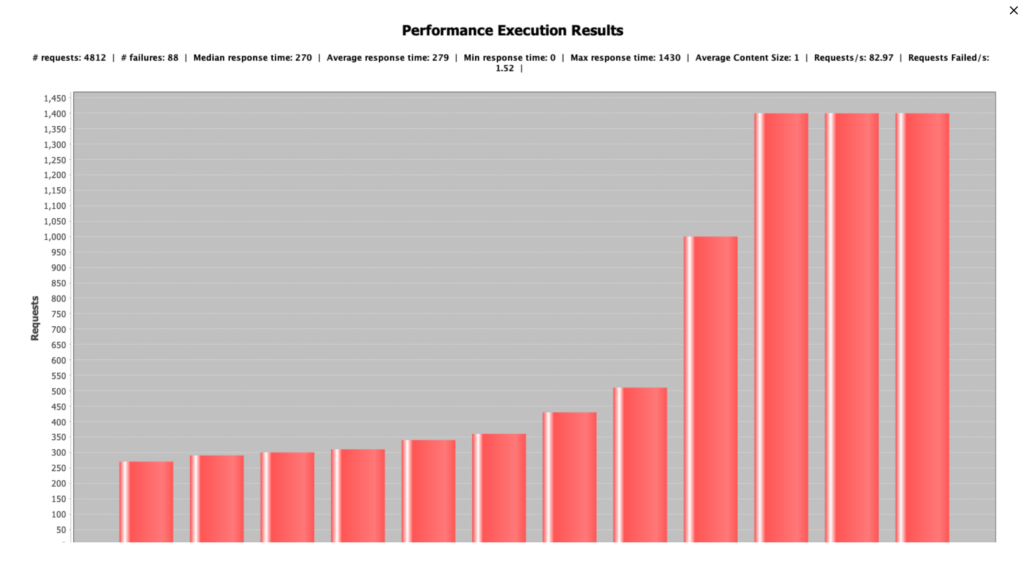

and that the last step of the Scenario has an image attached, which, if you’re executing Load and Performance Testing, you’ll be interested to analyze:

Further Work

Not-so-humbly speaking, I am pretty happy with what pretzel can do, but the current design does leave room for improvement:

- concurrency – the current implementation allows only sequential execution

- it’s heavy – it can be executed only on the same machine where the test code is running and, depending on the scenarios you have, this can prove quite expensive if you run it very frequently in your CI/CD

- it has that extra code (src/main/java/pretzel) that extends the Task, which could be avoided

- like any other test automation tool, it tells you there are problems, but it doesn’t tell you why

All of these points can definitely be improved if there is enough interest in the tool out there. Care to contribute with a PR? We can make this great together!